Gemini

Overview

The Gemini connector enables your AI Colleagues to integrate with Google's Gemini AI models, facilitating automated text generation, multimodal content analysis, code execution, and web search capabilities.

Gemini is Google's multimodal AI model: it can understand and generate text, analyze images and documents, execute code, and search the web. The Gemini connector lets Leena AI use these capabilities in automation and assistance workflows.

API Details

Leena AI integrates with Gemini via REST APIs.

Documentation link: https://developers.google.com/gemini-api

Setup

The Gemini connector supports two authentication methods: Gemini API Key and Leena AI Auth Key.

Prerequisites

Before setting up the Gemini connector, ensure you have:

- Access to Google AI Studio or Google Cloud Console

- A valid Gemini API key (if using Gemini API Key authentication)

- Access to your Leena AI workspace with connector management permissions

Get credentials

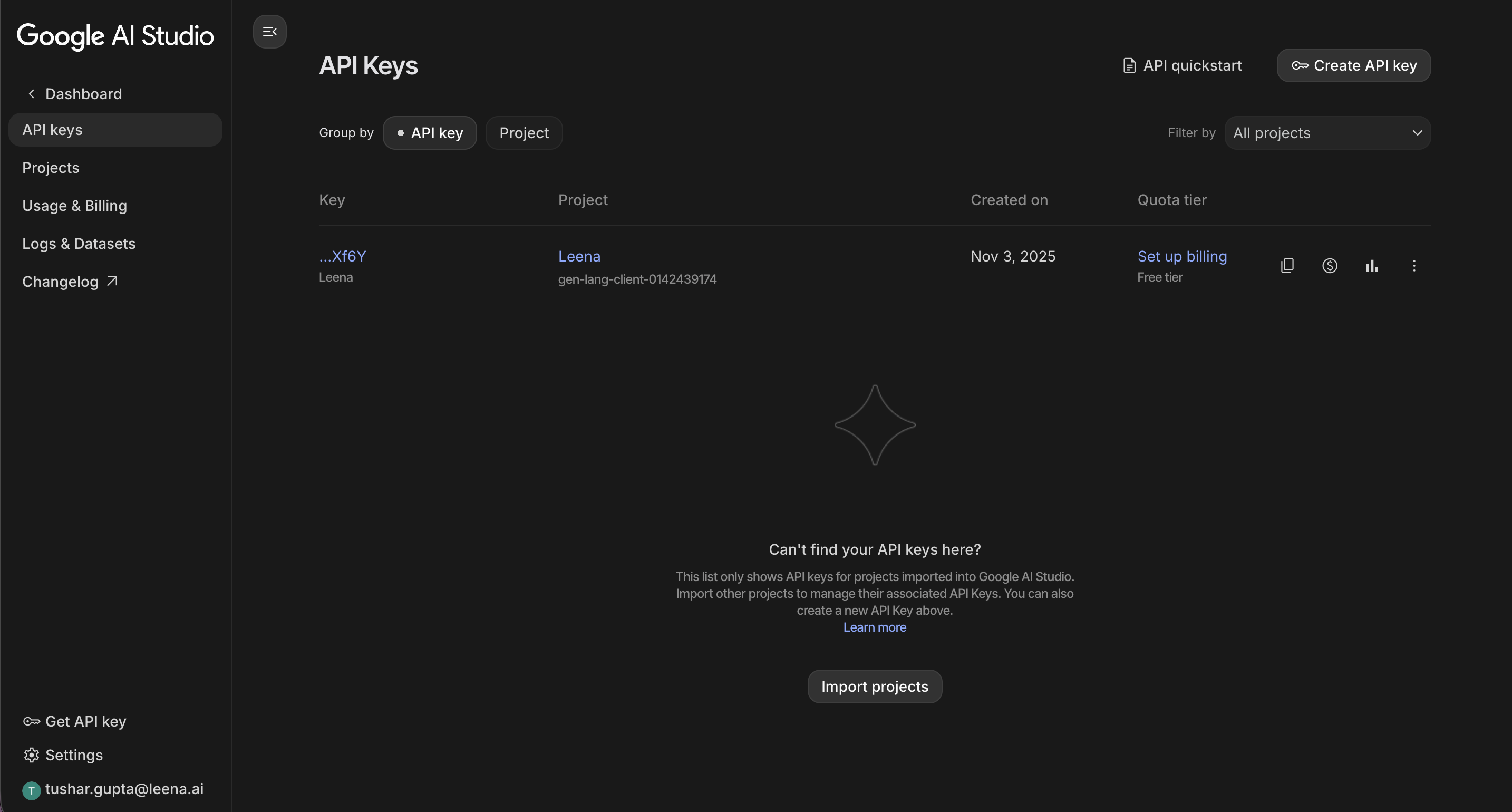

Option 1: Using Gemini API Key

Here is how to obtain a Gemini API key:

1. Go to Google AI Studio

2. Sign in with your Google account

3. Navigate to the API Keys section

4. Click on "Create API Key"

5. Copy the generated API key

6. Store it securely for use in Leena AI

Option 2: Using Leena AI Auth Key

If you choose to use Leena AI's pre-configured authentication, no additional credentials are required. This option uses internally configured keys managed by Leena AI.

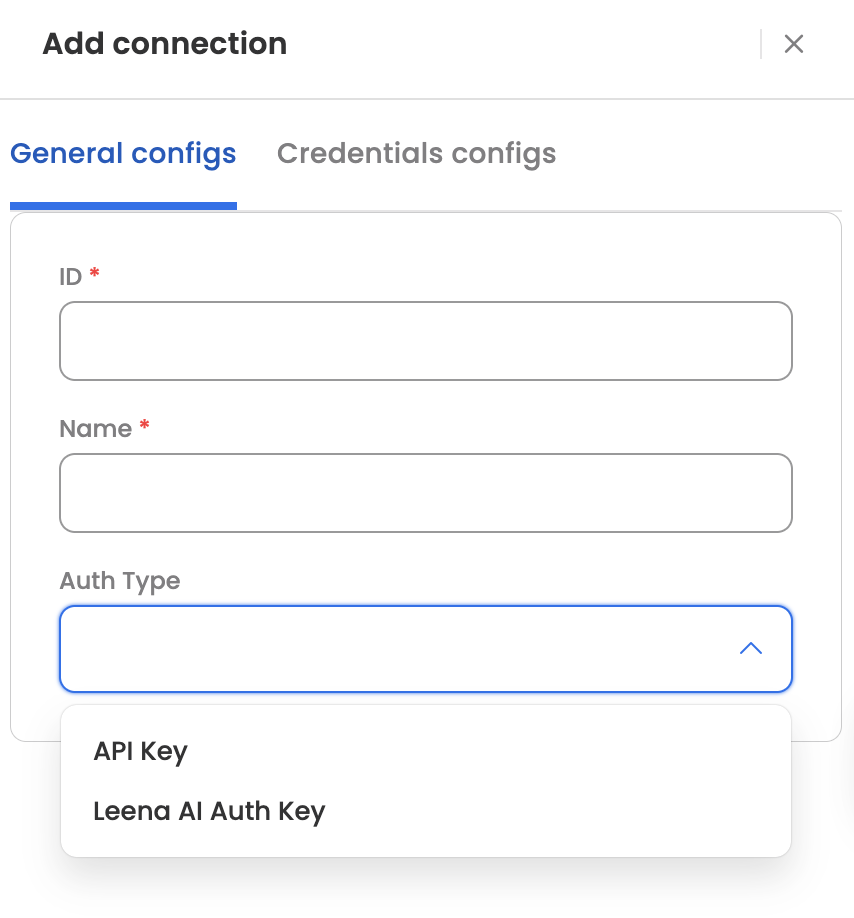

Add connection

Here is how to add a connection on Leena AI:

1. Log in to your Leena AI workspace

2. Navigate to Settings > Integrations

3. Search for "Gemini" and select it from the list to add a new connector

4. Configure the connector:

   - Authentication Type: Choose between "Gemini API Key" or "Leena AI Auth Key"

   - API Key: (Required only if using Gemini API Key) Your Google AI Studio API key

5. Save the connector configuration

Actions

The following actions are supported for the Gemini connector:

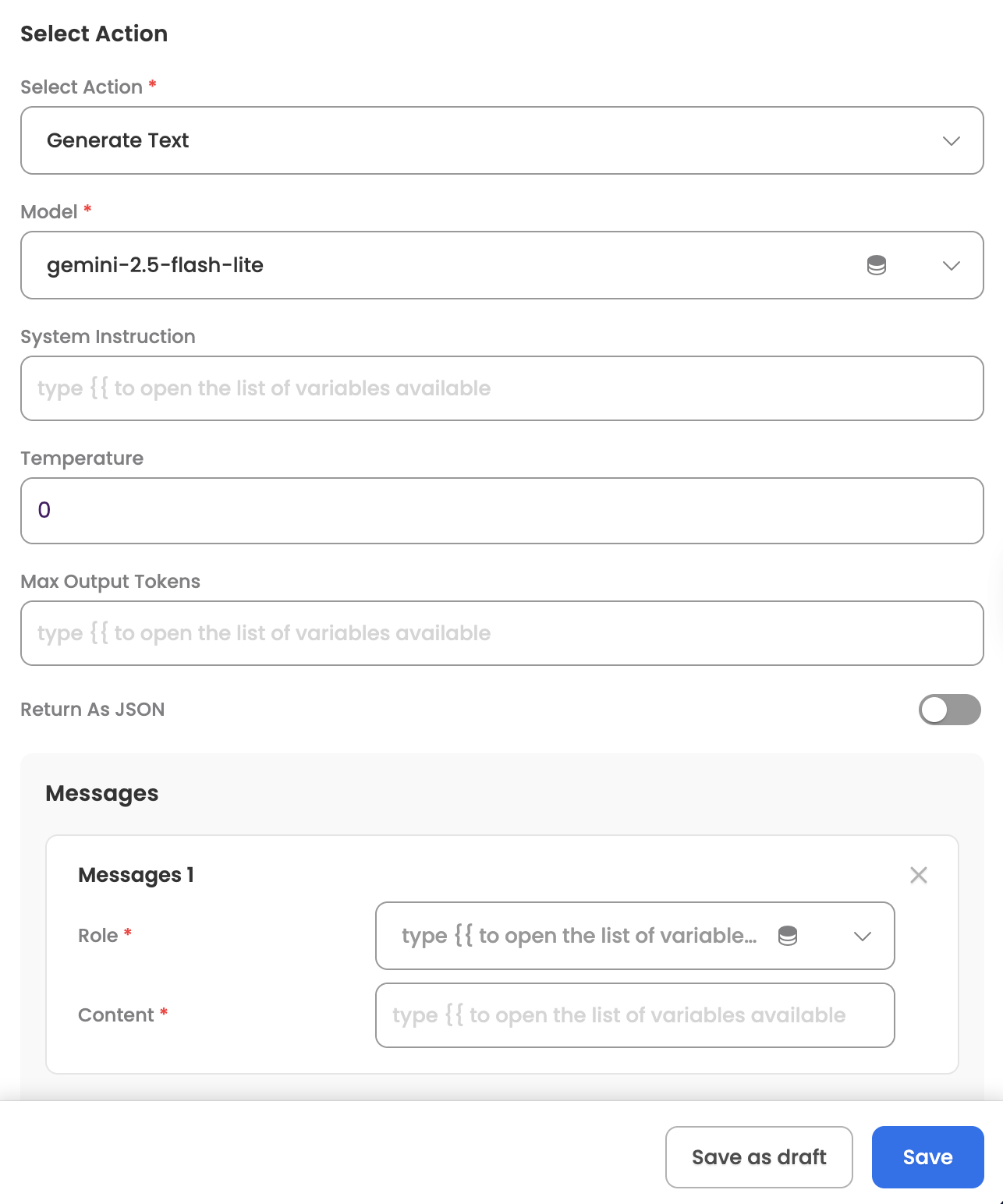

Generate Text

Generates text responses using Google's Gemini models with support for multimodal inputs (text, images, PDFs), web search via Google Search, and code execution capabilities. The Agent can leverage this action to generate content, analyze images, perform calculations, and search for current information.

Input Parameters

Here are the input parameters required to set up this action:

Mandatory

| Name | Description |
|---|---|
| Model | The Gemini model to use (dynamically fetched or pre-configured based on authentication type) |
| Messages | Array of messages with roles ('user' or 'model') and content |

Optional

| Name | Description |
|---|---|
| System Instruction | System-level instructions to guide model behavior |
| Temperature | Controls randomness in responses (0-2, where lower values are more deterministic). Default: 0 |
| Max Output Tokens | Maximum number of tokens in the response |
| Return As JSON | Whether to return response in structured JSON format. Default: false |
| Sample JSON | Example JSON structure (required if Return As JSON is true) |
| Tools | Array of tools to enable (Google Search or Code Execution) |
| MIME Type | MIME type for file attachments (for user messages with files) |
| File Data | File URL or Base64 encoded data (for user messages with files) |
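The constraints in the parameter tables above can be checked before an input is sent to the action. The following is a minimal sketch, not part of the connector: the function name `validate_generate_text_input` is hypothetical, and the camelCase keys (`returnAsJson`, `sampleJson`) are assumed from the sample inputs on this page.

```python
from typing import Any


def validate_generate_text_input(payload: dict[str, Any]) -> list[str]:
    """Return a list of problems found in a Generate Text input payload."""
    errors: list[str] = []

    # Mandatory parameters: Model and Messages.
    if not payload.get("model"):
        errors.append("model is required")
    messages = payload.get("messages")
    if not isinstance(messages, list) or not messages:
        errors.append("messages must be a non-empty array")
    else:
        for i, message in enumerate(messages):
            if not isinstance(message, dict) or message.get("role") not in ("user", "model"):
                errors.append(f"messages[{i}].role must be 'user' or 'model'")

    # Temperature is documented as 0-2, defaulting to 0.
    temperature = payload.get("temperature", 0)
    if not isinstance(temperature, (int, float)) or not 0 <= temperature <= 2:
        errors.append("temperature must be a number between 0 and 2")

    # Sample JSON is required whenever Return As JSON is true.
    if payload.get("returnAsJson", False) and "sampleJson" not in payload:
        errors.append("sampleJson is required when returnAsJson is true")

    return errors
```

An empty list means the payload satisfies the documented constraints; the connector itself may apply further checks that are not described here.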

Message Structure:

Each message in the Messages array contains:

- role: Either 'user' or 'model'

- content: Text content for the message

- mimeType: Optional MIME type for file attachments (for 'user' role)

- fileData: Optional file URL or Base64 data (for 'user' role)

Supported File Types:

- Images: JPEG, JPG, PNG, WebP, HEIC, HEIF

- Documents: PDF, DOC, TXT

- Input Methods: URL or Base64 encoded data
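As an illustration of the message structure and the Base64 input method described above, the sketch below builds a single 'user' message from raw file bytes. The helper name `build_user_message` is hypothetical, and requiring `mimeType` and `fileData` together is an assumption of this sketch rather than a documented rule.

```python
import base64
from typing import Optional


def build_user_message(
    text: str,
    file_bytes: Optional[bytes] = None,
    mime_type: Optional[str] = None,
) -> dict[str, str]:
    """Build one 'user' entry for the Messages array, optionally with a file."""
    # Assumption: a file attachment only makes sense with both fields set.
    if (file_bytes is None) != (mime_type is None):
        raise ValueError("file_bytes and mime_type must be provided together")

    message = {"role": "user", "content": text}
    if file_bytes is not None and mime_type is not None:
        message["mimeType"] = mime_type
        # Base64-encode the raw bytes; a file URL could be used as fileData instead.
        message["fileData"] = base64.b64encode(file_bytes).decode("ascii")
    return message
```

For example, `build_user_message("What is in this image?", image_bytes, "image/jpeg")` produces a message with the same shape as the image-understanding sample input on this page.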

Tool Configuration:

The integration supports two built-in tools:

- Google Search: Enables web search capabilities

- Code Execution: Enables code execution for calculations and data processing
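The two tools above map to entries in the Tools array. A small sketch of how that array might be assembled follows; the helper name `build_tools` is hypothetical, and the `type` values `GOOGLE_SEARCH` and `CODE_EXECUTION` are taken from the sample inputs on this page.

```python
def build_tools(google_search: bool = False, code_execution: bool = False) -> list[dict[str, str]]:
    """Return the Tools array for the requested built-in tools."""
    tools: list[dict[str, str]] = []
    if google_search:
        # Enables web search via Google Search.
        tools.append({"type": "GOOGLE_SEARCH"})
    if code_execution:
        # Enables code execution for calculations and data processing.
        tools.append({"type": "CODE_EXECUTION"})
    return tools
```

Calling `build_tools()` with no arguments returns an empty list, which corresponds to leaving the optional Tools parameter unset.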

Available Models:

When using Gemini API Key authentication, models are dynamically fetched. Common models include:

- gemini-2.5-flash: Fast and efficient model

- gemini-2.5-flash-lite: Lightweight version for simple tasks

When using Leena AI Auth Key, pre-configured models are available:

- gemini-2.5-flash: Fast and efficient model

- gemini-2.5-flash-lite: Lightweight version for simple tasks

Here are sample JSON inputs:

Basic Text Generation:

{

"model": "gemini-2.5-flash",

"messages": [

{

"role": "user",

"content": "Explain how AI works in simple terms"

}

],

"temperature": 0

}

With System Instruction:

{

"model": "gemini-2.5-flash",

"systemInstruction": "You are a helpful coding assistant. Always provide clear, well-commented code examples.",

"messages": [

{

"role": "user",

"content": "Write a Python function to calculate factorial"

}

],

"temperature": 0.7,

"maxOutputTokens": 500

}

Multi-turn Conversation:

{

"model": "gemini-2.5-flash",

"messages": [

{

"role": "user",

"content": "What is quantum computing?"

},

{

"role": "model",

"content": "Quantum computing is a type of computing that uses quantum mechanics..."

},

{

"role": "user",

"content": "Can you give me a practical example?"

}

],

"temperature": 0

}

Image Understanding:

{

"model": "gemini-2.5-flash",

"messages": [

{

"role": "user",

"content": "What is in this image?",

"mimeType": "image/jpeg",

"fileData": "BASE64_ENCODED_IMAGE_DATA"

}

],

"temperature": 0

}

JSON Response Format:

{

"model": "gemini-2.5-flash",

"messages": [

{

"role": "user",

"content": "List 3 programming languages. Return the response in the format {\"languages\": [{\"name\": \"\", \"year\": 0}]}. Return parsable JSON string only with no extra text."

}

],

"temperature": 0,

"returnAsJson": true,

"sampleJson": {

"languages": [

{

"name": "",

"year": 0

}

]

}

}

With Google Search Tool:

{

"model": "gemini-2.5-flash",

"messages": [

{

"role": "user",

"content": "What are the latest developments in renewable energy?"

}

],

"tools": [

{

"type": "GOOGLE_SEARCH"

}

],

"temperature": 0.7

}

With Code Execution Tool:

{

"model": "gemini-2.5-flash",

"messages": [

{

"role": "user",

"content": "Calculate the first 20 Fibonacci numbers and show me the results"

}

],

"tools": [

{

"type": "CODE_EXECUTION"

}

]

}

Complete Example: All Features Combined:

{

"model": "gemini-2.5-flash",

"systemInstruction": "You are a research assistant. Use web search when needed and execute code for calculations.",

"messages": [

{

"role": "user",

"content": "Analyze this chart, search for recent trends, and calculate the growth rate",

"mimeType": "image/png",

"fileData": "BASE64_ENCODED_CHART_IMAGE"

}

],

"tools": [

{

"type": "GOOGLE_SEARCH"

},

{

"type": "CODE_EXECUTION"

}

],

"temperature": 0.5,

"maxOutputTokens": 2048

}

Response

Upon successful execution, the action returns:

- Generated text content

- Model role information

- Finish reason (e.g., "STOP")

- Usage metadata including:

  - Prompt token count

  - Candidates token count

  - Total token count

- For JSON responses: Both raw text and parsed JSON fields

- For multimodal inputs: Analysis of images or documents

- For tool usage: Search results or code execution output

Sample Response Structure:

{

"candidates": [

{

"content": {

"parts": [

{

"text": "Generated response text..."

}

],

"role": "model"

},

"finishReason": "STOP",

"index": 0

}

],

"usageMetadata": {

"promptTokenCount": 12,

"candidatesTokenCount": 156,

"totalTokenCount": 168

}

}

JSON Response Example:

{

"candidates": [

{

"content": {

"parts": [

{

"text": "{\"languages\":[{\"name\":\"Python\",\"year\":1991},{\"name\":\"JavaScript\",\"year\":1995},{\"name\":\"Java\",\"year\":1995}]}",

"parsedJson": {

"languages": [

{"name": "Python", "year": 1991},

{"name": "JavaScript", "year": 1995},

{"name": "Java", "year": 1995}

]

}

}

],

"role": "model"

},

"finishReason": "STOP",

"index": 0

}

]

}
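To show how the response fields listed above might be consumed, here is a minimal sketch that reads the generated text, any parsed JSON, the finish reason, and the total token count from a response shaped like the samples on this page. The function name `summarize_response` and the keys of its return value are hypothetical; only the first candidate is inspected, which is an assumption of this sketch.

```python
from typing import Any


def summarize_response(response: dict[str, Any]) -> dict[str, Any]:
    """Pull commonly used fields out of a Generate Text response."""
    # Assumption: the first candidate holds the answer of interest.
    candidate = response["candidates"][0]
    parts = candidate["content"]["parts"]

    # Concatenate the text of every part that carries text.
    text = "".join(part.get("text", "") for part in parts)

    # parsedJson appears in the JSON response sample; use it when present.
    parsed = next((part["parsedJson"] for part in parts if "parsedJson" in part), None)

    # usageMetadata is absent from the JSON response sample, so read it defensively.
    usage = response.get("usageMetadata", {})
    return {
        "text": text,
        "parsed_json": parsed,
        "finish_reason": candidate.get("finishReason"),
        "total_tokens": usage.get("totalTokenCount"),
    }
```

Reading `usageMetadata` with `.get` keeps the helper usable with both sample shapes, since the JSON response sample omits that block.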